Apple’s Visual Look Up feature has been steadily improving over the past few years, but in iOS 26 it takes a big leap forward under the new name Visual Intelligence. The most noticeable change? It now kicks in the moment you take a screenshot, allowing you to interact with on-screen content in new ways.

(You can still access Visual Intelligence through the camera view in the usual ways, such as the Camera Control on supported iPhones.)

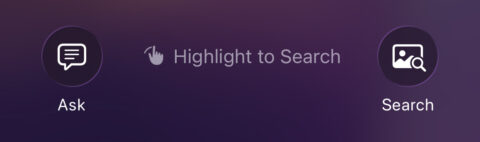

After you take a screenshot by pressing the side button and the volume up button at the same time, two new buttons appear in the screenshot editing view: Ask and Image Search. They are part of a redesigned screenshot interface that analyzes the contents of the image and offers relevant actions based on what it finds.

The Ask button sends your screenshot, along with a typed question, to ChatGPT, so you can ask about what's on screen. The Image Search button, meanwhile, sends the image to Google to find visually similar results, which is handy if you spot a product, place, or outfit you want to track down online. You'll also see prompts to search in apps such as Etsy and Pinterest when your iPhone judges them relevant to the contents of the image.

You can even draw around the part of the image you’re interested in to get more specific results.

Even if you don't tap either button, Visual Intelligence is still working in the background. It detects useful content in your screenshots and offers context-specific suggestions. For example, if you screenshot an event invitation, you might see a one-tap option to add it to your calendar. If you screenshot a product, you may be shown shopping results from the web. The familiar features for identifying plants, animals, landmarks, and artworks are still here, too.

It's worth noting that while content detection happens on-device, using Ask or Image Search shares your screenshot with external services (OpenAI and Google, respectively), so avoid using those options on images that contain sensitive or personal information.