Content warning: child abuse

Apple has expanded on some of the automated child protection features coming to iOS 15 in the US later this year, including explicit image warnings in Messages, safety guidance in Siri and Search, and iCloud photo scanning to detect abuse.

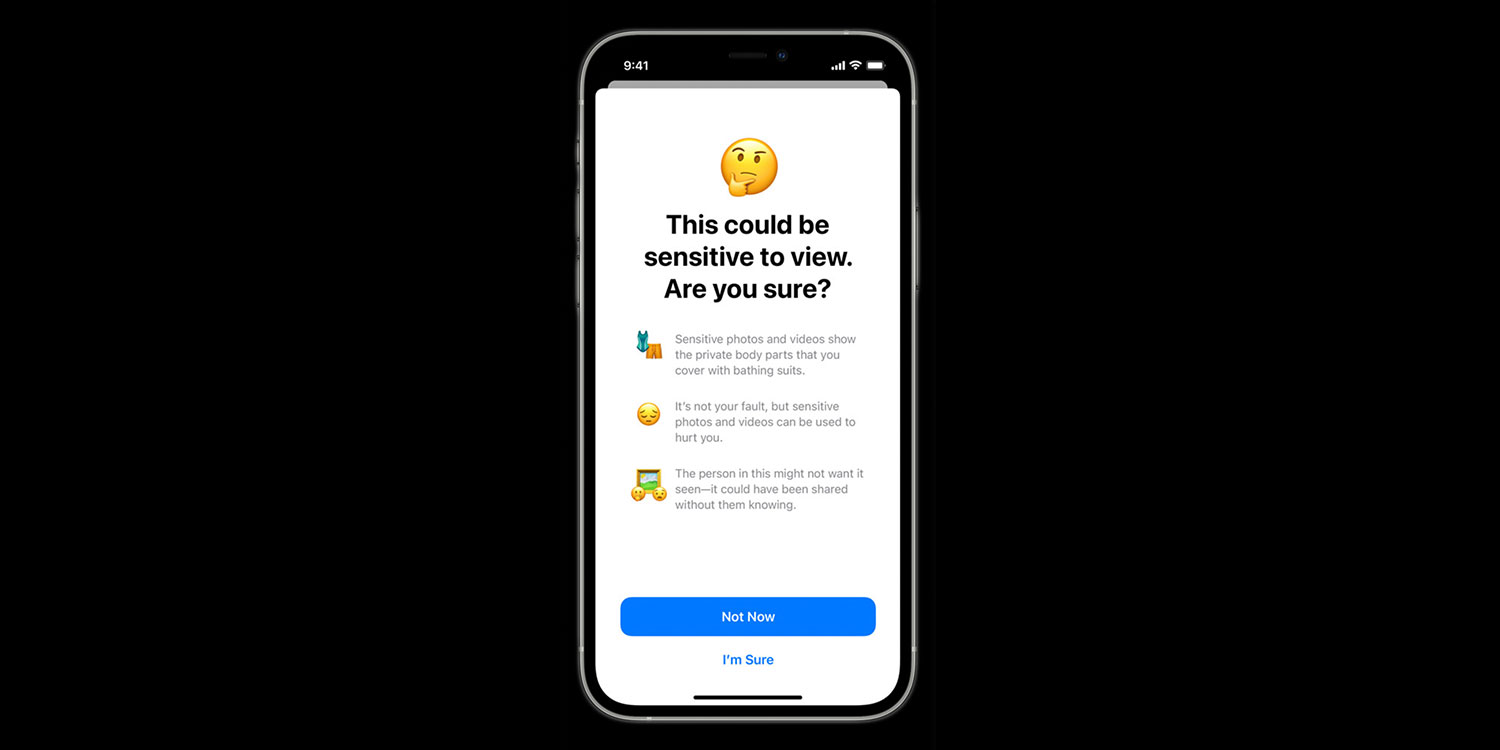

The first feature will use machine learning to detect sexually explicit images sent or received by children in the Messages app and automatically blur them. The child will then be shown a message explaining the dangers of sharing sensitive imagery before deciding whether or not to unblur the image. For kids aged 12 and under, parents can activate a feature that notifies them if their child decides to view sensitive material.

The second feature is an automated intervention that appears when someone uses Siri or Search to look up harmful or problematic terms. This guidance will explain why the topic is dangerous and provide links to additional resources and support if needed.

But it’s the third feature, automated photo scanning, that has caused a stir. The Financial Times first reported on it, with pundits worried that such a feature could fly in the face of Apple’s privacy ideals – or even be misused “in the hands of an authoritarian government.”

The feature is designed to scan a user’s photo library for instances of Child Sexual Abuse Material, or “CSAM,” and grant Apple access to those files if enough instances are found.

As it stands, iOS is designed so that nobody, not even Apple itself, can access any of your files without knowing your password. This philosophy was the basis of a spat with the FBI a few years back, in which Apple refused to build a “backdoor” into iOS for criminal investigations.

That’s why many consumers are worried, despite the noble intention of fighting child abuse. Apple has a history of putting customer privacy above all else, and this move would make it “trivial” for Apple to scan user photos based on other criteria, at the request of governments around the world.

It’s a difficult issue, and if executed badly, it could be the start of a slippery slope in which iOS becomes more vulnerable and unsolicited photo scanning becomes more and more invasive.

But Apple’s rebuttal is a detailed one that lays out how the system would actually work. The company says its CSAM scanning is performed securely on-device and that the results remain secret and anonymous until they exceed a certain threshold. At that point, Apple is notified and a team of human reviewers is granted access to double-check the photos.

Apple says “the threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.”

“By design, this feature only applies to photos that the user chooses to upload to iCloud Photos, and even then Apple only learns about accounts that are storing collections of known CSAM images, and only the images that match to known CSAM. The system does not work for users who have iCloud Photos disabled. This feature does not work on your private iPhone photo library on the device.”

Put simply, a mindless algorithm will perform these checks automatically, and only if the system is extremely certain it has detected serious abuse will Apple get involved.
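For readers who think in code, the gating logic Apple describes can be pictured with a toy sketch. This is not Apple's implementation: the names `Account`, `process_upload`, and `needs_human_review`, the placeholder fingerprints, and the threshold value of 3 are all made up for illustration, and a plain counter like this one does not model how below-threshold results are kept secret.

```python
from dataclasses import dataclass, field

# Hypothetical values for illustration only. The article does not give
# Apple's real threshold or describe how images are fingerprinted.
MATCH_THRESHOLD = 3
KNOWN_FINGERPRINTS = {"fp-a", "fp-b", "fp-c", "fp-d"}


@dataclass
class Account:
    icloud_photos_enabled: bool
    # Distinct fingerprints that have matched the known set so far.
    matched: set = field(default_factory=set)


def process_upload(account: Account, fingerprint: str) -> None:
    """Check a photo only if it is being uploaded to iCloud Photos."""
    if not account.icloud_photos_enabled:
        return  # Per Apple's statement, the system does nothing here.
    if fingerprint in KNOWN_FINGERPRINTS:
        account.matched.add(fingerprint)


def needs_human_review(account: Account) -> bool:
    """Human review is triggered only once matches exceed the threshold."""
    return len(account.matched) > MATCH_THRESHOLD


# Usage: three matches plus an unrelated photo stay under the threshold.
account = Account(icloud_photos_enabled=True)
for fp in ["fp-a", "fp-b", "holiday-photo", "fp-c"]:
    process_upload(account, fp)
print(needs_human_review(account))  # False

# A fourth distinct match pushes the account over the threshold.
process_upload(account, "fp-d")
print(needs_human_review(account))  # True
```

The two points the sketch tries to capture are the ones Apple stresses: nothing is checked when iCloud Photos is off, and a single match on its own does not bring a human into the loop.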

There has been a lot of inaccurate reporting and misunderstanding on this weighty topic, so if it’s of interest to you, we’d urge you to read about these features straight from the horse’s mouth and make up your own mind. Apple has published a detailed explainer about how all this will work, along with some frequently asked questions.

That page also details the less controversial features designed to protect children in Messages, Siri, and Search, all of which will be released in a software update following the release of iOS 15, iPadOS 15, and macOS Monterey.